How Batch Processing Is Changing in the Age of AI

Batch processing is workflow orchestration that executes units of work over a bounded set of inputs. It runs continuously, not just after hours, and optimizes for correctness, completeness, and throughput. Today's batch processing coordinates multi-step, dependency-aware work across data, services, and environments, and can be triggered by events just as often as by schedules.

This approach is often called workload automation (WLA) or job scheduling, but these names don't capture how dramatically it has evolved. For many businesses, batch processing isn't a legacy technology—it's the backbone of their most critical operations, from payroll to AI model training.

In this article, we'll redefine what batch processing means today, examine how it complements stream processing rather than competing with it, and provide guidance on leveraging both approaches for maximum business impact.

The outdated definitions of batch processing reflect a business world that no longer exists. For decades, batch was defined by when it ran: after business hours, during overnight windows when systems were otherwise idle. This made sense when businesses closed at 5 PM and computing resources were scarce.

But modern businesses operate 24/7. Global operations, always-on digital services, and real-time customer expectations mean there are no "idle hours" anymore. The batch jobs that power invoicing, payroll, employee onboarding, and AI model training don't wait for nighttime—they run continuously throughout the day.

What has changed isn't whether batch processing is needed, but how we understand and define it. Modern terminology focuses on "workflow orchestration" rather than "overnight jobs." The distinction between batch and other processing methods is no longer about when you process, but why you process that way.

Today's batch processing has evolved to become:

Today's batch processing relies on sophisticated orchestration platforms that manage dependencies, triggers, and execution paths without constant human oversight.
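To make dependency management concrete, here is a minimal sketch of a flow in which each job runs only after the jobs it depends on. The job names and the `run_flow` helper are hypothetical, chosen for illustration; a real orchestration platform adds scheduling, retries, and monitoring on top of this ordering.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical payroll flow: each job maps to the set of jobs it depends on.
FLOW = {
    "extract_timesheets": set(),
    "calculate_pay": {"extract_timesheets"},
    "generate_payslips": {"calculate_pay"},
    "post_to_ledger": {"calculate_pay"},
    "notify_employees": {"generate_payslips", "post_to_ledger"},
}

def run_flow(flow, run_job):
    """Run every job after all of its dependencies; return the execution order."""
    order = []
    for job in TopologicalSorter(flow).static_order():
        run_job(job)
        order.append(job)
    return order

# The job runner is a no-op here; a real one would launch the actual work.
executed = run_flow(FLOW, run_job=lambda name: None)
```

In this sketch `notify_employees` always runs last, because every other job is one of its direct or indirect dependencies.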

Modern batch workflows can be initiated in multiple ways:

A workflow orchestration platform manages dependencies through flows that define:

Modern systems use exception-based management to notify stakeholders only when intervention is needed:

The goal is strategic human intervention: people are engaged when their expertise is needed for decisions, not for routine monitoring.
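Exception-based management can be sketched as a runner that stays silent on success and contacts a person only when a job still fails after its retries. The function name, retry count, and message format below are assumptions made for illustration, not the behavior of any particular product.

```python
def run_with_exceptions_only(jobs, notify, max_attempts=2):
    """Run each job; call notify() only for jobs that still fail after retries."""
    results = {}
    for name, job in jobs.items():
        for attempt in range(1, max_attempts + 1):
            try:
                job()
                results[name] = "ok"
                break  # success: no notification is sent
            except Exception as exc:
                if attempt == max_attempts:
                    results[name] = "needs_attention"
                    notify(f"{name} failed after {attempt} attempts: {exc}")
    return results

def failing_job():
    # Stand-in for a job that cannot recover without a human decision.
    raise RuntimeError("source file missing")

alerts = []
results = run_with_exceptions_only(
    {"load_invoices": lambda: None, "reconcile_ledger": failing_job},
    notify=alerts.append,
)
```

Only `reconcile_ledger` produces an alert here; the successful job generates no message at all.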

Batch processing should be considered when business processes require correlation of events over time, completeness of data, or high-volume throughput. The choice between batch and stream isn't about modernity—it's about matching the processing approach to business requirements.

Use batch processing when:

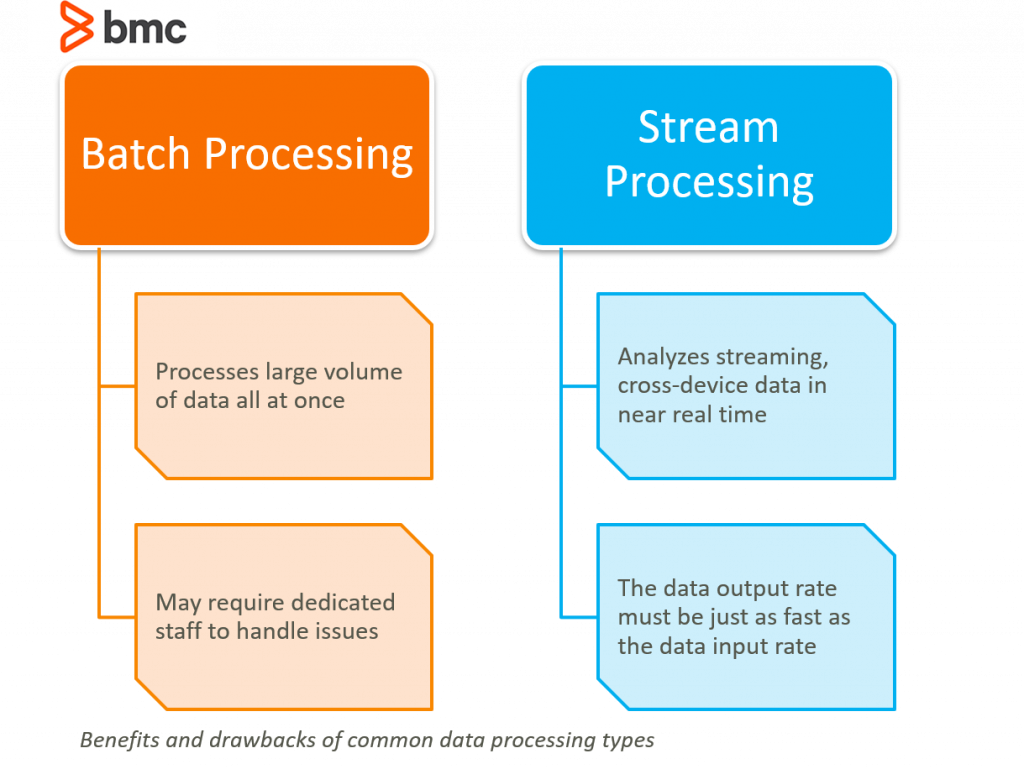

The most significant misconception about batch and stream processing is that they compete. In reality, they complement each other, each optimized for different business purposes.

Stream processing excels at acting on signals immediately: detecting fraud as it happens, responding to IoT sensor alerts, or triggering real-time personalization. These workloads process unbounded event flows with millisecond or second-level latency requirements.

But what happens after the immediate action? Consider fraud detection: stream processing catches the suspicious transaction in real-time and flags it instantly. Then batch processing orchestrates everything that follows—notifying stakeholders, blocking accounts, halting payments, opening investigation cases, reconciling related transactions, updating customer communications, and maintaining audit logs. These follow-up steps require correlation across multiple systems and must be completed reliably, but they don't all need to happen in milliseconds.
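The hand-off in the fraud example can be sketched as two pieces: a stream-side handler that decides on each event immediately, and a batch-side workflow that later runs every follow-up step for the bounded set of flagged cases. The threshold rule, the step names, and the in-memory queue are illustrative assumptions; a production system would use a real detection model and a durable queue.

```python
from collections import deque

# Follow-up steps named in the text; their order here is an assumption.
FOLLOW_UP_STEPS = [
    "notify_stakeholders",
    "block_account",
    "halt_payments",
    "open_investigation_case",
    "reconcile_related_transactions",
    "update_customer_communications",
    "write_audit_log",
]

flagged = deque()  # stand-in for a durable queue between stream and batch

def on_transaction(txn, threshold=10_000):
    """Stream side: decide on one event immediately."""
    suspicious = txn["amount"] > threshold  # toy rule, not a real fraud model
    if suspicious:
        flagged.append(txn)
    return suspicious

def run_follow_up_batch():
    """Batch side: drain the flagged cases and run every step for each one."""
    audit = []
    while flagged:
        txn = flagged.popleft()
        for step in FOLLOW_UP_STEPS:
            audit.append((txn["id"], step))  # a real step would call other systems
    return audit

for txn in [{"id": "t1", "amount": 120}, {"id": "t2", "amount": 25_000}]:
    on_transaction(txn)
audit = run_follow_up_batch()
```

The stream handler does as little as possible per event, while the batch workflow is where completeness matters: every flagged case gets every step.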

This pattern appears across industries:

The distinction isn't batch versus stream—it's understanding which business processes need immediate response (stream) and which need reliable, complete, correlated processing (batch).

Use stream processing when:

Use batch processing when:

Use a hybrid approach when:

Stream processing is generally more complex and more compute- and resource-intensive. If business processes rely on collecting data, even when that collection is relatively small, batch or workflow orchestration may be the better choice. When action depends on a series of events correlated over time, batch is the stronger approach.
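The guidance above can be condensed into a small decision helper. This is only a sketch of the reasoning: the parameter names are hypothetical, and defaulting to batch when nothing demands immediate action is an assumption based on the point that stream processing costs more to run.

```python
def choose_processing(needs_immediate_action,
                      correlates_events_over_time,
                      needs_complete_data=False):
    """Return 'stream', 'batch', or 'hybrid' for a given business process."""
    needs_batch = correlates_events_over_time or needs_complete_data
    if needs_immediate_action and needs_batch:
        return "hybrid"  # fast detection plus reliable follow-up
    if needs_immediate_action:
        return "stream"  # a single event must be acted on right away
    return "batch"       # assumed default: the simpler, cheaper option

fraud_case = choose_processing(True, True)
sensor_alert = choose_processing(True, False)
payroll_run = choose_processing(False, True, needs_complete_data=True)
```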

The perception that batch processing is outdated couldn't be further from reality. The most modern, cutting-edge workloads in technology today depend heavily on batch processing.

Consider that OpenAI recently concluded a reported $300 billion deal with Oracle to purchase compute resources for model training. Large language models and generative AI represent the frontier of modern computing, and effective models are built, trained, and updated through batch approaches.

Every AI system in use today depends heavily on batch processing to:

Machine learning workloads are batch-intensive by nature: they require complete datasets, complex computations over bounded inputs, and iterative processing cycles. This is batch processing at its most sophisticated—and most essential.
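As a toy illustration of that shape (a complete dataset, a computation over a bounded input, and repeated passes), the sketch below fits a single weight with full-batch gradient descent. It is deliberately tiny and is not how large language models are trained; the data, learning rate, and epoch count are assumptions chosen so the example converges.

```python
def train(data, epochs=200, lr=0.05):
    """Fit y = w * x by full-batch gradient descent on mean squared error."""
    w = 0.0
    for _ in range(epochs):
        # Each epoch is one pass over the complete, bounded dataset.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # generated from y = 2x
w = train(data)  # converges toward 2.0
```

Each update needs the gradient over the whole dataset, which is why the work is naturally organized as a batch job rather than as a reaction to individual events.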

Businesses rely on batch processing for their most critical operations, both internal and customer-facing:

Internal services:

Customer-facing services:

The benefit organizations gain from batch processing is straightforward: they can deliver the services they exist to provide. The question is not whether batch processing is needed, but how to increase the level of automation, make it more efficient, and process ever-growing volumes of data as effectively as possible.

Batch processing excels at ensuring completeness and correctness because it works with bounded, complete datasets. This allows for:

For high-volume workloads, batch processing delivers better economics:

Without the overhead of maintaining constant low-latency infrastructure, batch systems can process millions of records efficiently.
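Because a batch has a known, bounded input, a job can work through it in chunks and then check its result against control totals before anything is committed. The sketch below assumes hypothetical invoice records with an `amount_cents` field and control totals supplied by the upstream system.

```python
from itertools import islice

def chunks(records, size):
    """Yield successive lists of at most `size` records."""
    it = iter(records)
    while True:
        chunk = list(islice(it, size))
        if not chunk:
            return
        yield chunk

def process_batch(records, expected_count, expected_total_cents, chunk_size=1000):
    """Process records in chunks, then reconcile against the control totals."""
    count = 0
    total = 0
    for chunk in chunks(records, chunk_size):
        count += len(chunk)
        total += sum(r["amount_cents"] for r in chunk)
    if count != expected_count or total != expected_total_cents:
        raise ValueError(f"reconciliation failed: {count} records, {total} cents")
    return {"count": count, "total_cents": total}

invoices = [{"amount_cents": 1250} for _ in range(2500)]
summary = process_batch(invoices, expected_count=2500, expected_total_cents=3_125_000)
```

If a record is missing or an amount is wrong, the job raises an error instead of reporting success, which is the completeness guarantee that is hard to give over an unbounded stream.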

Compared to real-time or stream processing, batch is significantly less complex:

Modern batch systems run 24/7 with minimal human intervention:

While batch processing delivers significant value, successful implementation requires attention to several factors.

Many organizations adopt a platform engineering model for their batch processing infrastructure. These teams focus on the technology itself—ensuring it's installed, maintained, up to date, and available. But they rely on users to make optimal use of the tool in business contexts.

This separation of concerns is critical: platform teams handle the care and feeding of the technology, while business, data, and application teams own their specific workflows and outcomes. Whether it is called self-service or something else, this model prevents bottlenecks and enables domain experts to use batch capabilities effectively.

The goal of automation is to minimize unnecessary human intervention, but artificial intelligence hasn't replaced human intelligence. Even in the most modern use cases for automation, there's a concept called "human in the loop" where AI might generate several options, then rely on a human to make the final decision.

The goal isn't to eliminate humans. It's to use human intervention where it's needed and to avoid relying on it where it's not. This is especially true for:

When issues occur, teams need rapid access to relevant knowledge. One of the major areas where generative AI and AI assistance are evolving is in making people more effective by giving them faster, better access to both public knowledge and the institutional knowledge embedded within the organization.

The ideal is enabling AI to guide humans to the information they need as quickly as possible—surfacing relevant runbooks, linking to past similar incidents, and proposing potential root causes based on organizational history.

As with any platform, there's a learning curve involved in managing modern batch systems. Teams need to understand:

Organizations succeed when they invest in training, create clear runbooks, and build internal expertise rather than depending entirely on external consultants.

If you're wondering whether batch processing is the right approach for your organization, consider where you might apply workflow orchestration in your business operations. Are there gaps you could fill with better automation?

Common use cases include:

As a rule of thumb, if you find yourself regularly doing large computing jobs manually, or if you have critical processes that require reliable multi-step orchestration, the right batch processing platform could free up significant time and resources for your organization.

When deciding if your organization needs to invest in modern batch processing capabilities, ask:

Modern batch processing is workflow orchestration that runs continuously, handles your most critical business services, and powers cutting-edge AI workloads. It complements stream processing by handling the reliable, correlated, multi-step work that follows real-time detection.

The distinction between batch and stream is about business purpose, not technology fashion. Choose stream when a single event requires immediate action. Choose batch when actions depend on correlating events over time or when completeness, correctness, and throughput matter most. Use both together when you need fast detection and reliable follow-up.

Organizations that succeed with modern batch processing separate platform engineering from self-service usage, leverage AI assistance for troubleshooting, and ensure strategic human intervention where business judgment matters. They focus not on whether batch processing is needed, but on how to enhance automation, improve efficiency, and scale with growing data volumes.