How To Use & Manage Kubernetes DaemonSets

Kubernetes is a leading open-source engine that orchestrates containerized applications.

In this article, we will have a look at the DaemonSet feature offered by Kubernetes. We’ll walk you through use cases and how to create, update, communicate with, and delete DaemonSets.

(This article is part of our Kubernetes Guide.)

A DaemonSet ensures that all (or some) of the nodes in a cluster run a copy of a specific pod. As nodes are added to the cluster, the DaemonSet adds pods to them; as nodes are removed, those pods are garbage collected.

DaemonSets are an integral part of a Kubernetes cluster. They make it easy for administrators to deploy services (pods) across all nodes or a subset of them.

DaemonSets can improve the performance of a Kubernetes cluster by distributing maintenance tasks and support services across all nodes. They are well suited for long-running services such as monitoring or log collection. Typical use cases include running a cluster storage daemon, a log collection daemon such as fluentd, or a node monitoring daemon on every node.

Depending on your requirements, you can set up multiple DaemonSets for a single type of daemon, each with different flags, memory, CPU, and so on, to support multiple configurations and hardware types.
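To illustrate running a separate DaemonSet per hardware type, the manifest below is a minimal sketch of one such DaemonSet: it targets only nodes labeled disktype=ssd and requests more memory. The name node-agent-ssd, the nginx placeholder image, and the resource values are hypothetical; a second DaemonSet for the remaining nodes would use its own name, labels, node selection, and resources.

```yaml
# Hypothetical DaemonSet that runs only on nodes labeled disktype=ssd.
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-agent-ssd          # hypothetical name
spec:
  selector:
    matchLabels:
      name: node-agent-ssd
  template:
    metadata:
      labels:
        name: node-agent-ssd    # must match spec.selector.matchLabels
    spec:
      nodeSelector:
        disktype: ssd           # only nodes carrying this label get a pod
      containers:
      - name: node-agent
        image: nginx            # placeholder image for illustration
        resources:
          requests:
            cpu: 200m
            memory: 256Mi
          limits:
            memory: 256Mi
```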

By default, the node that a pod runs on is decided by the Kubernetes scheduler. However, DaemonSet pods are created by the DaemonSet controller, and when that controller also schedules them, it can lead to inconsistent pod behavior (for example, DaemonSet pods are not created in the Pending state while waiting for a node, as normal pods are) and to issues with pod priority preemption.

To mitigate these issues, the ScheduleDaemonSetPods feature lets Kubernetes schedule DaemonSet pods with the default scheduler instead of the DaemonSet controller; this is now the default behavior. The controller adds a NodeAffinity term to each DaemonSet pod instead of setting the .spec.nodeName field, and the default scheduler then binds the pod to the target host.

The following is a sample NodeAffinity configuration:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          # Key Name
          - key: disktype
            operator: In
            # Value
            values:
            - ssd
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent
```

Above, we configured NodeAffinity so that the pod is scheduled only onto nodes that have the "disktype=ssd" label.
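For comparison, the term that the DaemonSet controller injects into each of its pods pins the pod to one specific node by name rather than by label. The fragment below sketches the shape of that term as it would appear in a generated pod spec; target-host-name is a placeholder for the actual node name.

```yaml
# Affinity term added to each DaemonSet pod by the controller (fragment).
affinity:
  nodeAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
      nodeSelectorTerms:
      - matchFields:
        - key: metadata.name      # match on the Node object's name
          operator: In
          values:
          - target-host-name      # placeholder: the node this pod is meant for
```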

Additionally, DaemonSet pods respect taints and tolerations in Kubernetes. The node.kubernetes.io/unschedulable:NoSchedule toleration is automatically added to DaemonSet pods, so they can still be scheduled on nodes marked as unschedulable (for example, cordoned nodes). (For more information about taints and tolerations, please refer to the official Kubernetes documentation.)
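The automatically added toleration appears in a DaemonSet pod's spec roughly as the first entry below; you do not need to write it yourself. The second entry is an optional toleration you might add to a pod template so the daemon also runs on control plane nodes. The taint key node-role.kubernetes.io/control-plane is the one used by newer kubeadm-based clusters, so treat it as an assumption about your cluster setup.

```yaml
tolerations:
# Added automatically to DaemonSet pods; shown for reference only.
- key: node.kubernetes.io/unschedulable
  operator: Exists
  effect: NoSchedule
# Optional, user-added: tolerate the control plane taint so the
# daemon is also scheduled on control plane nodes (assumed taint key).
- key: node-role.kubernetes.io/control-plane
  operator: Exists
  effect: NoSchedule
```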

As with other Kubernetes objects, DaemonSets are defined in a YAML manifest. Let's have a look at the structure of a DaemonSet manifest.

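```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: test-daemonset
  namespace: test-daemonset-namespace
  labels:
    app-type: test-app-type
spec:
  template:
    metadata:
      labels:
        name: test-daemonset-container
    spec:
      containers:               # a pod template needs at least one container
      - name: test-container    # hypothetical container name and image
        image: nginx
  selector:
    matchLabels:
      name: test-daemonset-container
```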

As you can see in the structure above, apiVersion, kind, and metadata are required fields in every Kubernetes manifest. The DaemonSet-specific fields go under the spec section: .spec.template, the pod template, and .spec.selector, whose labels must match the labels of the pod template. Both fields are mandatory.

Now let's create a sample DaemonSet. Here, we will use the "fluentd-elasticsearch" image, which will run on every node in the Kubernetes cluster. Each pod collects the logs on its node and sends the data to Elasticsearch. Save the following manifest as daemonset-example.yaml:

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd-elasticsearch-test
  namespace: default # Namespace
  labels:
    k8s-app: fluentd-logging
spec:
  selector: # Selector
    matchLabels:
      name: fluentd-elasticsearch-test-deamonset
  template: # Pod Template
    metadata:
      labels:
        name: fluentd-elasticsearch-test-deamonset
    spec:
      tolerations: # Tolerations
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
      containers: # Container Details
      - name: fluentd-elasticsearch
        image: quay.io/fluentd_elasticsearch/fluentd:v2.5.2
        resources:
          limits:
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 200Mi
        volumeMounts:
        - name: varlog
          mountPath: /var/log
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
      terminationGracePeriodSeconds: 30
      volumes:
      - name: varlog
        hostPath:
          path: /var/log
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers
```

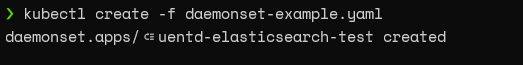

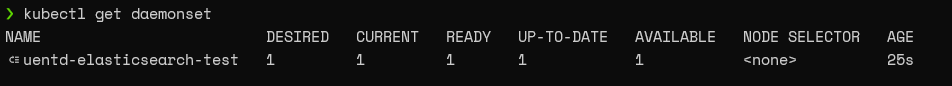

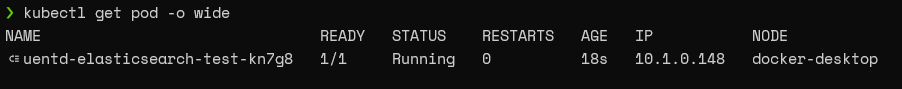

First, let's create the DaemonSet using the kubectl create command and then retrieve the DaemonSet and pod information as follows:

```shell
kubectl create -f daemonset-example.yaml
kubectl get daemonset
kubectl get pod -o wide
```

As you can see from the above output, our DaemonSet has been successfully deployed.


The DaemonSet scales automatically with the cluster, running one pod on every node or on every node in the subset selected by its configuration. (Here, there is only one node, as we are running this on a test environment with a single-node Kubernetes cluster.)

When it comes to updating DaemonSets, if a node's labels change, the DaemonSet automatically adds pods to newly matching nodes and deletes pods from nodes that no longer match. To update the DaemonSet itself, edit the manifest and apply it with the kubectl apply command, for example kubectl apply -f daemonset-example.yaml.


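There are two strategies that can be followed when updating DaemonSets: RollingUpdate (the default), which automatically replaces old DaemonSet pods after you update the pod template, and OnDelete, which creates new pods only after you manually delete the old ones.

These strategies can be configured using the spec.updateStrategy.type field.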
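A minimal sketch of the relevant part of a DaemonSet spec, assuming you want rolling updates that take down at most one node's pod at a time. The maxUnavailable value of 1 is an illustrative choice; if you switch type to OnDelete, drop the rollingUpdate block as well.

```yaml
spec:
  updateStrategy:
    type: RollingUpdate       # the default; OnDelete is the alternative
    rollingUpdate:
      maxUnavailable: 1       # at most one DaemonSet pod unavailable during the update
```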

Deleting a DaemonSet is a simple task: run the kubectl delete command with the DaemonSet's name, for example kubectl delete daemonset fluentd-elasticsearch-test. This deletes the DaemonSet along with all the pods it has created.


To delete only the DaemonSet and leave its pods running, add the --cascade=orphan flag to the kubectl delete command (older kubectl versions use --cascade=false, which is now deprecated).

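There are multiple ways to communicate with pods created by a DaemonSet. Common patterns include having the pods push data to another service (such as a database or log store) so that they need no clients; exposing a hostPort so clients can reach each pod through its node's IP and a known port; creating a headless Service with the same pod selector so clients can discover the pods through DNS; and creating a regular Service with the same pod selector, which routes each request to a DaemonSet pod on an arbitrary node.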
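One common option is a headless Service whose selector matches the DaemonSet's pod labels, so that a DNS lookup of the Service returns the IP of every DaemonSet pod. The sketch below reuses the pod label from the fluentd-elasticsearch-test example; the Service name fluentd-headless and port 24224 are hypothetical, since the example container does not declare a port.

```yaml
# Hypothetical headless Service for discovering DaemonSet pods via DNS.
apiVersion: v1
kind: Service
metadata:
  name: fluentd-headless        # hypothetical name
  namespace: default
spec:
  clusterIP: None               # headless: DNS returns the pod IPs directly
  selector:
    name: fluentd-elasticsearch-test-deamonset   # same label as the DaemonSet's pod template
  ports:
  - name: forward
    port: 24224                 # assumed port
    targetPort: 24224
```

Pods in the default namespace could then resolve fluentd-headless (or fluentd-headless.default.svc.cluster.local, assuming the default cluster domain) to the list of DaemonSet pod IPs.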

In this article, we learned about Kubernetes DaemonSets. These configurations can easily facilitate monitoring, storage, or logging services that can be used to increase the performance and reliability of both the Kubernetes cluster and the containers.